By Kevin Smith, CS Disco

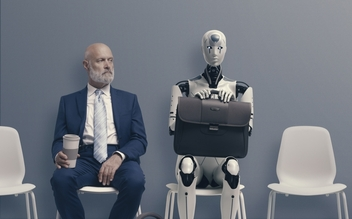

Artificial intelligence has reached an inflection point. No one can deny its potential, but many fear its power. Key considerations around data security and privacy, the future of work, and government regulation will be paramount for business leaders as they evaluate AI adoption within their organizations.

A harbinger of change, the launch of ChatGPT became a cultural phenomenon that has spurred a wealth of interest in developing AI. According to Swiss banking giant UBS, the generative AI application may have become one of the fastest-growing apps in history after it was estimated to have reached 100 million monthly active users. The introduction of this groundbreaking tool, combined with the growth of Large Language Model (LLM) solutions, has given enterprises a new paradigm for evolving their day-to-day business processes, or at least the promise of one. This is a genie that is unlikely to go back in the bottle.

Yet as business leaders and global organizations eagerly seek to accelerate their AI adoption efforts, concerns about data security, privacy, AI “hallucinations,” and regulatory compliance remain top of mind. As enterprises seek to leverage the strengths of AI, they must also mitigate its risks.

Recently, there has been increasing scrutiny over how accurate and reliable ChatGPT’s intelligence is, adding another layer of complexity to the current AI boom. Intelligence might be the wrong way to think about what these models and derivative technologies do, but in this accelerated environment, it will be critical for organizations to carefully evaluate each new solution before integrating it into critical operations and processes.

The State of AI

While generative AI technology remains nascent, it’s poised to accelerate the maturity of capabilities around document parsing, code generation, and effective information extraction. Variations of AI are being adopted across industries, with the financial services sector leading the way due to the effectiveness of AI’s predictive algorithms in assessing, predicting and mitigating risks. Conversely, industries heavily reliant on non-quantifiable decision-making, such as marketing and manufacturing, have been much slower to embrace AI (Statista).

So how does Gen AI become enterprise ready? Much has been written, and said at conferences and in sales pitches, about its promise to fundamentally disrupt the enterprise workforce. It is true that generative AI represents a generational agent of change, but it is not quite ready… yet.

OpenAI’s technology was sprung on the world, and the gears of imagination started spinning incredibly quickly once people got a taste of its potential. But there are three core things every product person thinks about in terms of innovation. First, product market fit: does it do something really well in a way that has not been done before? Second, security: in today’s world there are many tests for this, including what happens with the data we share with these essentially OEM technologies. And lastly, have the innovators broken the context of the systems and tools used to do work, or is the innovation elegantly embedded to enable management and quality control of the jobs it aims to support?

Generative AI is excellent at using the information it was trained on and the context it is given to produce stunning syntheses of information and novel content, whether code, responsive prose or general information structure. We have tested dozens of LLMs on different legal jobs and used experts to assess whether their output is good enough for the job the solution would be hired to do, such as answering legally relevant questions or organizing complex legal documents into addressable frameworks. This is just one sector and a few examples, but great enterprise organizations are not trying to deploy generative AI as a mostly okay substitute for human processes. There is still a lot of work to do to ensure product market fit for the solutions that leverage these technologies.

There are other fit requirements that have not yet been met. For example, if you need to leverage these technologies cost-effectively to bring innovation to market, and need high volumes of information processed quickly, then the current capacity limits of many LLMs may become an issue. GPU shortages have led to rationing as LLM providers try to keep up with demand and treat all users evenly, which creates performance mismatches. A magical answer that needs to be submitted multiple times, or that takes a rather long time to complete, is not the magical experience that some expect.

Can we trust it? Data Security, Privacy and Regulation

Today, organizations without the appropriate safeguards can face immediate risks when using AI, as exemplified by the Samsung ChatGPT data leak. The inadvertent inclusion of company data in ChatGPT's training set highlights the need for careful consideration in deploying enterprise applications of ChatGPT and of AI in general. In a KPMG study, 81% of executive respondents considered cybersecurity a primary concern with AI adoption, while 78% saw data privacy as a primary concern. To avoid compromising privileged data, business leaders adopting AI technology for enterprise applications must establish appropriate guardrails for AI tools and for any data used in training sets.

Fabrication of evidence presents another worrisome risk, including the proliferation of “deepfake” photographs and video imagery. While falsified evidence will likely spur greater forensics involvement in legal review, faked photographs can wreak havoc in other areas as well. Consider the impact of a faked photograph of the Pentagon shared on social media earlier this year, which caused a drop in stock prices before the error was widely known.

As AI manipulation becomes more sophisticated, businesses across all sectors must do their due diligence to conduct fact checks and verify their sources. In response to the rapid advancement of AI, the White House launched an initiative on “responsible AI”, addressing worker impact, employer use of surveillance technology, and regulatory standards. The evolving international regulatory landscape is something that enterprise adopters will need to keep a close eye on, as governments continue to develop standards and practices for protecting against anticipated data risks.

Use Cases for Generative AI in Sensitive Industries

Research from KPMG found that 65% of US executives believe generative AI and LLM solutions will have a very high impact on their organization in the next 3-5 years. However, 60% say that we are still potentially a few years away from actual implementation. While full-scale AI implementation may seem a long way off, investing time and resources into understanding crucial business needs and capabilities will pay dividends in the long run.

Today, we’re already seeing the potential for generative AI to dramatically impact business use cases within highly regulated industries such as finance, healthcare and legal. In finance, AI can improve accuracy in forecasting, reduce errors, lower operational costs, and optimize decision making for organizations able to invest the time and resources for development (Gartner). In healthcare, it can support synthetic data generation for drug development, diagnostics, administrative tasks, streamlined procurement of medical supplies, and clinical decision-making (Goldman Sachs), with the caveat that trustworthiness and validation are crucial in this context.

In the legal field, lawyers are cautious about AI adoption due to the importance of accuracy and data integrity during legal proceedings (Bloomberg Law). However, in the near future AI will give lawyers powerful new capabilities they’ve never had before, including comprehensive document parsing, code generation, information extraction, and improved natural language understanding, all of which will augment and optimize various workflows within the legal profession.

Many of the vendors in this space have started to tune their terms and technologies to meet some of the data security and privacy concerns, but not all have. There are approaches that companies can take to mitigate these risks, as we have where possible, but many of those rushing to participate remain immature.

AI and the Future of Work - Management and Workflow

According to research from Goldman Sachs, AI could potentially impact as many as 300 million jobs globally over the next five to ten years. While warnings of job replacement may seem dire, historically, advancements in technology have led to the creation of new jobs, as saved time and labor free up human talents for more creative endeavors. The use of AI technology could enhance labor productivity and contribute to global GDP growth of up to 7% over time. In the United States, office and administrative support jobs have the highest automation potential at 46%, followed by 44% for legal work and 37% for tasks in architecture and engineering. However, the impact of AI on jobs will vary across industries.

If we look at the jobs that can be impacted, one might suggest that adoption will only be as impactful as the management layer that exists to enable these technologies. Not all jobs have the same level of management requirements, and as such some roles will be enterprise ready for generative AI sooner than others. For example, if you are a marketing professional, you can obtain a draft of marketing collateral and then incorporate that into your normal human, word-based editing process before it is submitted for review, publication, etc. This is a standalone human process and requires very little integrated workflow. If you are a financial services firm using AI to analyze risk or provide investment recommendations, it is critical to be able to manage that information in its provenance, accuracy and distribution (use), which requires the generative AI implementation to be auditable in a system that can be easily managed.

There has been much debate around generative AI and its ability to change and improve the cost of legal outcomes. That debate often fails to account for the system (technical and human) that governs the pursuit of outcomes like justice or legal advice. How generative AI is implemented within the workflow of legal professionals is critical so that it can be trusted, examined and scaled across a diverse set of topics and contexts.

Conclusion

AI innovation stands at the threshold of immense possibilities, and has the potential to impact a myriad of aspects of society as we know it. While businesses may approach adoption cautiously, navigating risks and regulatory landscapes, the allure of gaining a competitive advantage will drive widespread adoption.

As enterprise enthusiasm continues to skyrocket, it becomes essential to balance excitement with a healthy amount of skepticism and rigorous evaluation. Embracing the power of AI and shaping the future requires careful consideration of its limitations, potential risks and ethical implications.